Instagram Expands Fact-Checking and Adds "False Information" Labels to Curb Misinformation

In an attempt to fight misinformation spread through photos and videos, Instagram is adding a “False Information” warning to the platform. Using a combination of user reports and automated detection, it will send suspected content to independent fact-checkers for review and label it “False Information” if it turns out to be false.

Instagram is expanding the limited fact-checking test it began in the U.S. in May and will now work with 45 third-party organizations to assess the accuracy of photo and video content on its app.

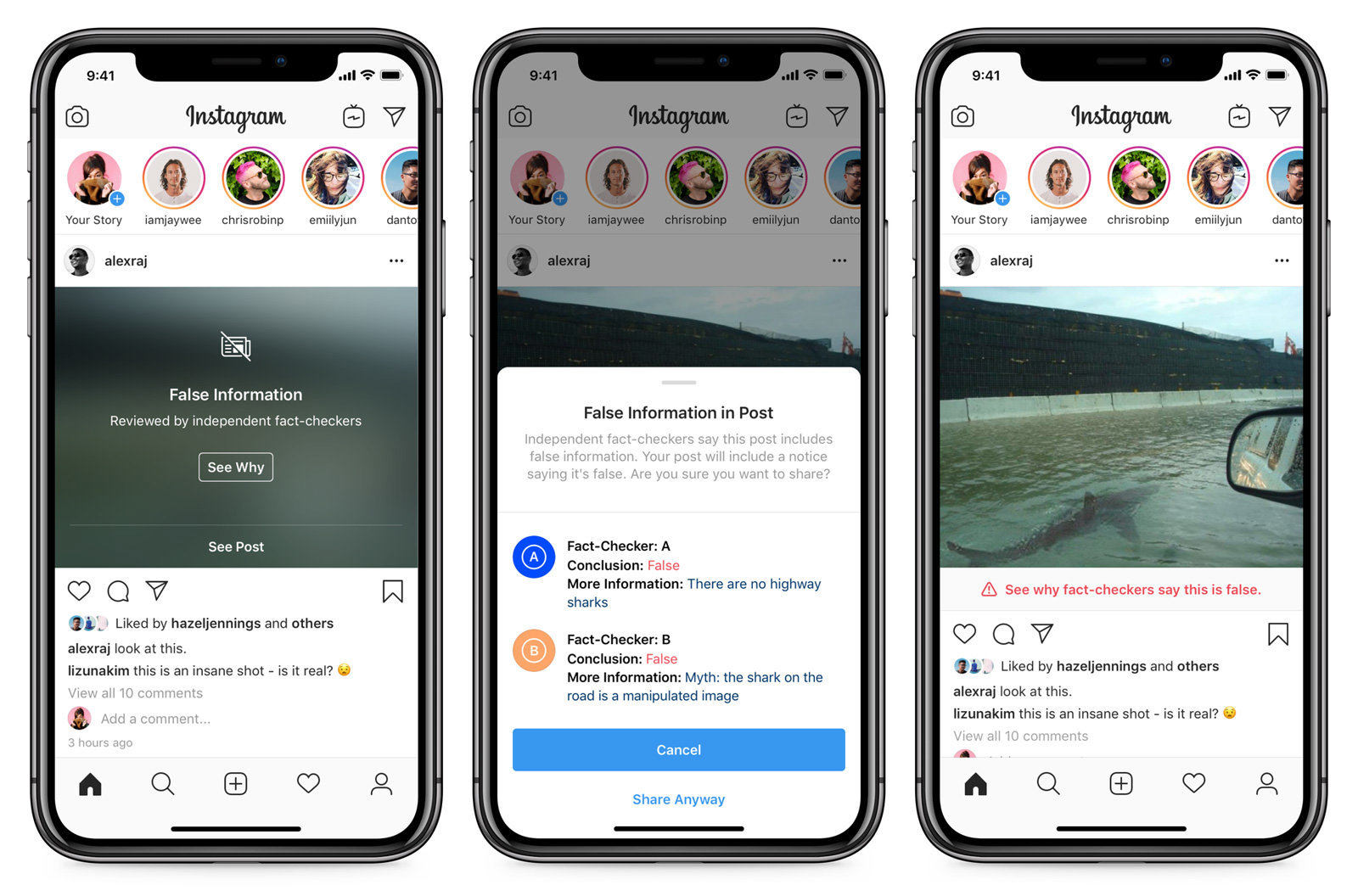

According to Instagram, content will be flagged as potentially false by “a combination of feedback from our community and technology.” If independent third-party fact-checkers then confirm that the content is false, three things happen:

- The photo or video is removed from the Explore and hashtag pages to “reduce its distribution.”

- The photo or video is clearly labeled “False Information” with an overlay that hides it until you choose to view it anyway.

- Anyone who tries to post the same photo or video will receive a warning that they are about to share false information.

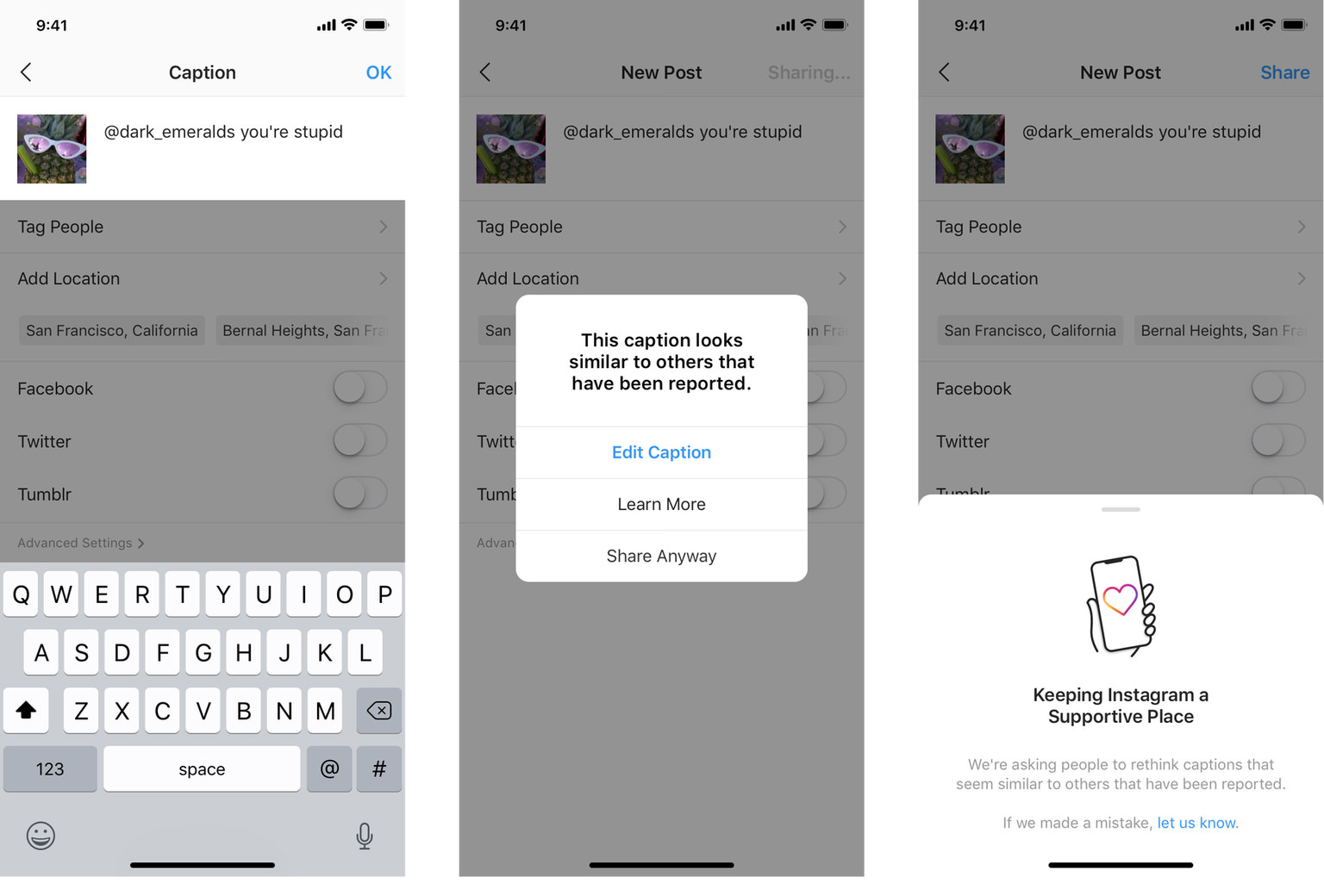

Instagram is trying to beef up its safety practices across the board. Today it began alerting users that the caption they’re about to post on a photo or video could be offensive or seen as bullying, offering them a chance to edit the text before they post it. Instagram started doing the same for comments earlier this year.

One group that’s exempt from fact-checking, though, is politicians. Their original content on Instagram, including ads, will not be sent for fact-checking, even if it’s blatantly inaccurate.

Instagram CEO Adam Mosseri has maintained that banning political ads could hurt challenger candidates who need the promotion, and that it would be difficult to draw the line between political ads and issue ads.

More info on Instagram’s Info Center.